This blog post is the second part of our Detection as Code (DaC) challenges series. You can read part one here.

Developing detections does not, by itself, pose many barriers for security engineering teams, as it is typically done in a lab or other controlled environment. But once rules are tuned and deployed to a SIEM, the work is only starting. Many things can go wrong after deployment, so a process of continuous, automated testing is crucial.

Detection Validation

In an ideal (and fictional) world, once the datasets are parsed, normalized, and put into production, the detections developed by your team would work forever. The reality is quite different. Maintenance is heavy work that needs to be done frequently - especially if you work at an MSP - yet the ecosystem lacks the tooling and processes to do it proactively. Effectiveness is an important metric, crucial to responding to incidents in time, and it is what we aim to ensure by constantly validating detections.

Change is inevitable

Changes occur daily in a modern enterprise, and not all of them are well managed. Even when they are internal, it's hard to get all the relevant people involved in the change request.

Some common examples are:

- Changes in log formats

Products are frequently updated, and because some require custom parsers due to their unstructured log format, these updates can easily break parsing and, in turn, break or degrade the detections.

- The monitored system doesn't have the required configurations applied

Some detections need custom audit settings and policies to work, and sometimes these are not defined in the baseline policies.

- Software Bugs

New versions of the products used in our detection pipeline can introduce bugs that cause errors in our receiving/indexing pipeline.

To identify changes that negatively impact the effectiveness of detection rules, we have spent the last few months building processes and tools that make detection validation future-proof and as automated as possible.

Introducing Automata

Automata is a tool to detect errors early and measure the effectiveness of SIEM rules against the behaviors they were developed to detect. It ensures that the whole process of collecting, parsing, and querying security data is working properly, and it alerts when things don't work as intended.

Automata supports Elastic (both Cloud and On-premise), and uses MITRE Caldera to simulate the adversary behaviors that are intended to be detected by the SIEM rules.

The Process and Adoption

Automata can be embedded in the CI/CD process or be used in daily/weekly assessments in order to detect errors and problems as soon as possible.

Adopting the practices that Automata is built to consume - like developing abilities for every detection developed and deployed - is not the simplest task, but it pays off in the long run. Beyond validation, it helps with detection engineering itself: the required research drives your team toward a repeatable process of simulating the hypothesis, which assists in the review and further development of detections.

Caldera uses the YAML format for its abilities, allowing us to add custom fields as we see fit. In our case, the mapping between the Ability and the Alert is done using the rules field.

This allows us to list all the detections we’d like to cover and test in a particular Caldera ability.

Automata will then check if the specified Sigma rule exists in the provided path, extract the alert name, and check whether it exists or not in the SIEM.
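As a rough illustration of that lookup (not Automata's actual code), the sketch below takes an ability already loaded into a Python dict, resolves each entry of its rules field against a Sigma directory, and reads the rule's title as the alert name. The shape of the rules field, the file name, the use of the Sigma title as the alert name, and the helper names `alert_names_for` and `missing_in_siem` are all assumptions made for the example.

```python
import re
from pathlib import Path

# Hypothetical ability, shown as the dict a YAML loader would produce.
# Only the existence of a custom "rules" field comes from the post;
# its exact shape and the file name are assumed.
ability = {
    "id": "0001",
    "name": "Example ability",
    "rules": ["example_rule.yml"],
}

# Sigma rules carry a top-level "title" key; a regex keeps this sketch
# free of third-party YAML dependencies.
TITLE_RE = re.compile(r"^title:\s*(.+?)\s*$", re.MULTILINE)


def alert_names_for(ability, sigma_dir):
    """Map each listed rule file to its Sigma title, or None if not found."""
    names = {}
    for rule_file in ability.get("rules", []):
        path = Path(sigma_dir) / rule_file
        if not path.is_file():
            names[rule_file] = None
            continue
        match = TITLE_RE.search(path.read_text(encoding="utf-8"))
        names[rule_file] = match.group(1).strip("'\"") if match else None
    return names


def missing_in_siem(names, siem_alert_names):
    """Return rule files whose alert name is unknown or absent from the SIEM."""
    return [
        rule_file
        for rule_file, title in names.items()
        if title is None or title not in siem_alert_names
    ]
```

Here `siem_alert_names` stands in for whatever set of alert names the SIEM reports; how that set is fetched is left out on purpose.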

The Result

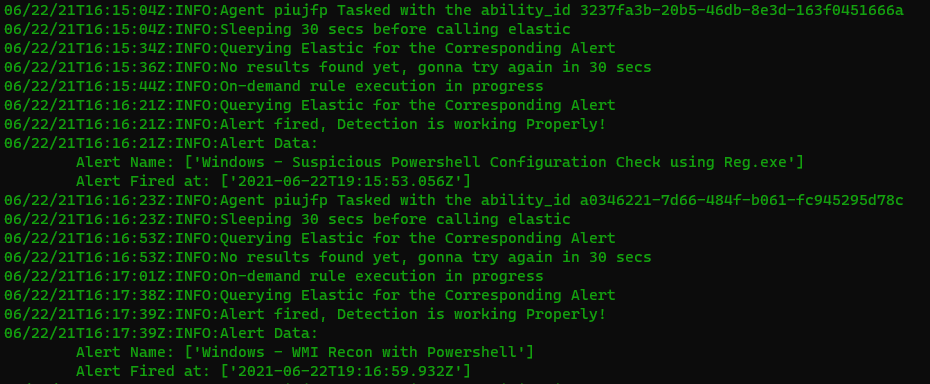

Upon execution, Automata tasks the specified agent with the Ability mapped to a given alert, then queries the SIEM every 30 seconds until it finds a corresponding alert or exceeds the specified time limit.
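The polling behavior can be sketched as a small loop. This is a minimal illustration rather than Automata's implementation: `wait_for_alert` and `query_siem` are hypothetical names, the 300-second default time limit is an assumption, and the sleep and clock functions are passed in only so the loop can be exercised without real waiting.

```python
import time


def wait_for_alert(query_siem, alert_name, timeout_s=300, interval_s=30,
                   sleep=time.sleep, clock=time.monotonic):
    """Poll the SIEM until the alert shows up or the time limit is exceeded.

    query_siem is a hypothetical callable that returns True when a matching
    alert exists. Returns True on a match, False on timeout.
    """
    deadline = clock() + timeout_s
    while True:
        if query_siem(alert_name):
            return True
        # Stop if the next attempt would land past the time limit.
        if clock() + interval_s > deadline:
            return False
        sleep(interval_s)
```

In practice `query_siem` would wrap the actual SIEM search for alerts raised after the ability was tasked.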

For now, the results contain three statuses:

- Success - Alert is being executed with no SIEM errors and returned a match during the testing

- Failed - Alert is being executed with no SIEM errors but didn't return a match within the time limit; needs manual review

- Bad Rule Health - Alert has execution errors on the SIEM, so the simulation was skipped; needs manual review
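The three statuses above amount to a simple decision: rule health is checked first, and only healthy rules are judged on whether a match was found. A minimal sketch, with `Status` and `classify` as illustrative names that are not taken from Automata:

```python
from enum import Enum


class Status(Enum):
    SUCCESS = "Success"
    FAILED = "Failed"
    BAD_RULE_HEALTH = "Bad Rule Health"


def classify(rule_has_errors, matched):
    """Derive a result status from rule health and the polling outcome."""
    if rule_has_errors:
        # The simulation is skipped for unhealthy rules, so `matched`
        # carries no meaning here.
        return Status.BAD_RULE_HEALTH
    return Status.SUCCESS if matched else Status.FAILED
```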

At the end of the execution, Automata generates a simple PDF report containing the results as well as some simple metrics. It also produces a CSV file that can be used to build your own report in Excel or be imported into the SIEM itself.
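To give an idea of what such a CSV export could look like, here is a small sketch using Python's standard csv module. The column names (`alert`, `ability`, `status`) and the success-rate metric are assumptions made for illustration; the actual columns and metrics Automata emits may differ.

```python
import csv
import io

# Assumed column layout, not Automata's actual schema.
FIELDS = ["alert", "ability", "status"]


def results_to_csv(results):
    """Serialize a list of result dicts to CSV text."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(results)
    return buf.getvalue()


def success_rate(results):
    """Fraction of tested alerts with a Success status (0.0 if none ran)."""
    if not results:
        return 0.0
    hits = sum(1 for r in results if r["status"] == "Success")
    return hits / len(results)
```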

Here is an example report:

Upon execution, the output of the script will look like this:

In this first version, Automata is already usable but remains a proof of concept. It will tell us whether we are heading in the right direction and establish a foundation for teams willing to adopt Detection Validation as part of their detection engineering process. Many adjustments are planned, as well as support for additional technologies.

Feedback

Feel free to reach out to us on Twitter or consider joining our Community Slack. We'd love to hear what you think of Automata. Visit the project on GitHub for more information.